MLOPS: How to operationalize ML models

- Arash Heidarian

- Mar 28, 2022

- 8 min read

Photo by Possessed Photography on Unsplash

Table of contents

Introduction

In short, MLOPS is a DevOps solution for ML models. But despite lot of similarities in terms of concept and terminologies, DevOps and MLOPS are quite different. I have published a separate blog here to show the major difference between MLOPS and DEVOPS. However, if you are not familiar with MLOPS, don't worry, I try to cover major concepts, why we need it and how it works.

Before I start explaining what is MLOPS, first lets have a quick look at classic data science projects. I, as a data scientist prefer to stick to my own principals. Why do I need MLOPS at all? Before answering to that question, let me show you what I mean by classic data science work style.

Classic Data Science

If I want to categorise data science projects based on their outputs, I create two categories namely Result Serving and Model Serving projects. In both types, data scientists are responsible for wide range of tasks when it come to model upgrade. Lets see how these two types of classic DS outputs look like:

Result Serving

Data scientists prepare their scripts and use a version control repo like GitHub for their code and then the model is executed on demand or schedule. As a result, the model outputs are stored in a database, where business can use it to build dashboards or just query the data.

Figure 1 Classic data science: Result Serving

Model Serving

Data scientists prepare their scripts and use a version control repo like GitHub for their code. Then a tool like Flask is used to develop API classes, so the model can be available on a an endpoint URL. Then the model can be executed on demand, when end user sends input data to the endpoint, the model generates the output and sends the result back. Then the results can be used in the app straights away or stored some somewhere to be used for querying or dashboarding. The endpoint can be designed to be used for a single record, on-fly prediction or batch prediction, where more than a single line of input data is sent and more than one result/output is expected.

Figure 2 Classic data science: Model Serving

Data Scientists’ Responsibilities

Here is a very generic scenario: Imagine you are data scientist and are required to build a model to predict store demands for a particular product. You get the data, you clean it, prepare it, build a model and now ready to serve it to business.

After a while, you realize the model is not performing as before (you and the product team detected output drift). You start to investigate, and you find out there is a drift in input distribution, plus, there is an anomaly in the data (a wrong product has been entered into the target product), and a new class in target variable make everything go unusual. So, you upgrade you data preparation codes to deal with similar things in future. You also realize that a tweak to hyper parameters on the model, gives you better result now. After a while, again something new is detected in the input data, you modify the code, and just by a chance, you decide to try the old hyperparameters you used initially, and here we go, that works better again. So, after a while, you realize that not only versioning your code (which you can do it using any repo tool like GitHub) is what you needed, but also versioning the model, and data input also required. So? What version worked fine on what dataset? What model and what hyper parameters were used in those versions? And the headache begins: marketing team and the product team come back to you saying: “we loved the way model was working between January and February, you are a legend, please make the model working like those days, they were pretty accurate” or you may hear “hey dude, can we just a give a little tweak to the model to include another new product? Just one new product, retrain the model, not a biggie” or “can we run the model on two sets of inputs at the same time? we just wanna see which one outperforms the other”. Now I can imagine how you are confused with traveling back and forth into the history of your work, and heavily rely on your memory. Let alone the drift detections and model monitoring which are already in your regular tasks. You also need to test any upgraded model for a while to see if it works fine or not. Some common responsibilities of data scientist are but not limited to:

Model & Data Prep:

Data preparation

Model (re)training

Model testing

Versioning:

Model versioning

Comparing different versions

Serving:

Consuming model and generating model results

Generating endpoint for API call

Making sure results are generated & accessible

Monitoring:

Input drift detection

Model output drift detection

Monitoring endpoint responsiveness

MLOPS

Now it might be easier to understand why MLOPS is the key to untie the mess I explained in classic data science. How? The table below shows how MLOPS helps to facilitate:

Model update & versioning

Dataset versioning

Model testing automation

Model and result serving automation

Testing model and result serving

Data and model drift detection

API responsiveness monitoring

Dedicating required resource

MLOPS lifts lot of responsibilities from data scientists and orchestrates the whole process in three main pipelines, after scripts are developed in Development step. To be realistic, maintaining and upgrading data science models on long term is either impossible without MLOPS, or terribly expensive with regular and high level error and failure.

Figure 3 - MLOPS

Development

This is the preliminary step, which is almost the same as what we explained in classic data science. At this step, data scientists start their development with preparing the scripts for data preparation, train and test the model and any other required steps. The development can be done either on a local machine or on one of cloud MLOPS environment like Azure ML Studio or GCP Vertex AI, where users can have access to Jupyter notebook (or connect their VS code to Azure ML studio resource), to script and try their codes. The codes are pushed into a version control repo. Every time updated scripts or dataset are pushed into the repo, the MLOPS process gets triggered. MLOPS pipelines will access the codes to orchestrate the required tasks. Pipelines explained below can be developed in different ways. I will post another blog on how and where these pipelines are created, for now I just explain what they mean to MLOPS and what they do.

Experiment Pipeline

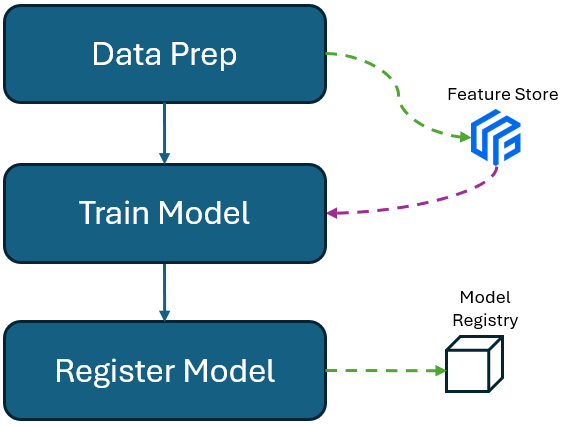

In this pipeline a series of tasks are orchestrated and executed automatically. I explain the tasks briefly:

Data test: in this step, the quality of used dataset is checked to make sure provided dataset coincides with expected format. A group of tests are executed, and finally a report is generated showing how many percent of the tests were passed successfully, and if all tests are passed, the next task will be executed.

Dedicating computing resource: As MLOPS usually rely on cloud resources, in this step a computing resource is dedicated according to what was defined and placed in repo. We can adjust the required resource at any time, depending on our needs. This is done as easy as adjusting few parameters.

Uploading dataset: Once we are sure the dataset is good to be used, we store the dataset into datastore (it depends what platform is used, for instance, datastore in Microsoft Azure would be Blob Storage).

Creating directories: In this step we create an empty directory to keep metadata and model file(s). These files will be used for staging.

Model training: Now its time to train a model. Model training my take seconds to hours, depending on data size, dedicated resource and the model complexity.

Store model & metadata: Once the model is trained, it is stored in the directory we created earlier. The model artifacts are usually stored in ONNX or PKL format. At this stage, we also store metadata. Metadata would keep lot of details about the model like model type, hyper parameters, training/testing/validations size, model accuracy and many other details.

Staging Pipeline

Staging pipeline or testing pipeline, is nothing more than an orchestrated tasks to test the model we trained and make sure it works as expected before moving to production. It usually contains three main tasks:

Dedicating computing resource: Remember, in this step we don’t want to train a model, we want to consume the model we trained at experiment pipeline, to generate scores/predictions/outputs. Hence you need to make sure you dedicate an appropriate machine.

Deploy to endpoint: Now its time to make the model accessible via API call to an endpoint URL. To do this, the model gets downloaded from the directory we created, and it gets assigned to an endpoint for testing.

Testing endpoint and model output: At this stage, the endpoint is tested by sending data (input variables to be consumed by the model). Once the endpoint receives the data, the model generates outputs/scores. Then the outputs are tested, to make sure it is in an expected format. If all tests are passed successfully, we can be sure that its time to move to production phase.

Production Pipeline

Although we can make production pipeline to get triggered automatically right after staging pipeline, it is recommended to trigger this pipeline after approval from a senior member. An approval should be sent out first, then after making sure the tests have been passed successfully at Staging pipeline. Tasks in production are not too different to stage pipeline:

Dedicating Compute Resource: when we want to productionise a model, we need to make sure its high availability and performance is guaranteed. That’s why usually at this stage, a dedicated resource could be from a highly reliable and scalable cloud resources such as Kubernetes.

Deploy to endpoint: Just like in staging pipeline, at this stage, production endpoint URL, serves the model. So the model which was tested on staging endpoint, is now assigned to production endpoint. The new/updated model is now officially available to business and ready to be consumed.

Monitoring: Maybe this is the most unique and valuable feature at MLOPS. In fact, cloud solutions like Azure ML Studio and Google Vertex AI, are equipped with powerful tools to monitor input drifts, output drifts, model availability and responsiveness, traffic monitoring and even access level assignment. If anything goes wrong, these tools can notify users, and meanwhile the tools provide dashboards and graphs.

Versioning

MLOPS tools automate the versioning for us. In fact, ML could solutions automatically version datasets and models for us. We can easily monitor how model performance has been changed over different versions of datasets and model updates.

Data scientists’ responsibilities

As mentioned, several times, MLOPS helps data scientists to have more time to focus on their algorithm developments and analytical works. However preparing MLOPS pipelines and all the clean automations, from a model development to production, requires MLOPS engineer’s skillset. We are not going through the details of MLOPS engineer’s responsibilities in this blog, but the data scientists responsibilities will be more or less limited to prepare scripts for the tasks below:

Data preparation

Model training

Model testing

Data testing

Componentize scripts for orchestrations

Summary

Classic data science approach is good for small and one-off projects. If a model supposed to be used for medium to long term, it means data and eventually models change over the time. Dealing with different versions of models and datasets brings too many complexities and management effort for data science team. On the other hand, data and model drift, data quality check and all security and accessing level concerns, becomes a manual and frustrating routine tasks for data science team. All these concerns can be coped with MLOPS and ML cloud services. MLOPS pipelines can orchestrate lot of manual processes such as proper resource dedication, data testing, model testing and metadata storing. ML cloud solutions also can facilitate dataset and model versioning, drift detection, drift and performance monitoring, failure alarming and access level adjustments.

Comentarios